May 6, 2021

Procedural Audio Madness

An exploration into procedural audio implementation using Unity and Wwise

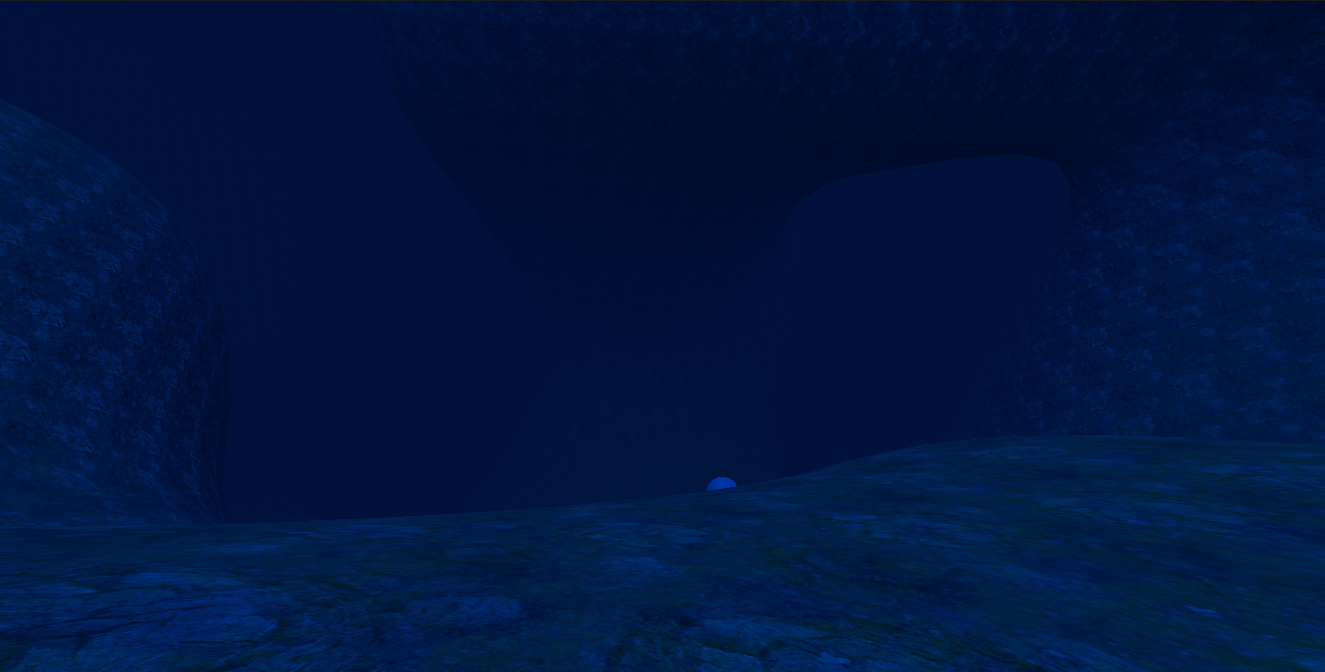

Underwater Cave Immersion

Cave Diver is a collaborative tech demo that highlights the possibilities of procedural 3D game generation, developed by myself, Arthur Hertz and Mark Borkowski as part of a Game Development course at Indiana University - Bloomington.

It was first conceptualized as an underwater horror/exploration game where players would navigate a system of procedurally-generated caves to escape a mysterious foe.

Though most of the story and design haven't been fleshed out yet, we have successfully developed and implemented the technical aspects of the project that will form the backbone of the experience.

Developing an Immersive Procedural Audio System

The goal of my portion of the project was to develop a completely procedural audio system integrated with Unity.

Procedural audio in games is typically realized with software that produces and combines simple waveforms such as sine waves, a.k.a. signal generators or synthesizers (synths).

The sounds these create are often very simple, but if modified and crafted carefully can produce incredibly interesting and varied sonic elements.
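To make "combining sine waves" concrete, here is a minimal sketch in plain C#, not code from this project. It sums a few sine partials into one mono buffer; the `SinePartial` and `AdditiveSynth` names are hypothetical and exist only for illustration.

```csharp
using System;

// Hypothetical helper: one sine component with a frequency (Hz) and amplitude.
public struct SinePartial
{
    public double Frequency;
    public double Amplitude;

    public SinePartial(double frequency, double amplitude)
    {
        Frequency = frequency;
        Amplitude = amplitude;
    }
}

public static class AdditiveSynth
{
    // Renders sampleCount mono samples by summing every partial at each sample time.
    public static float[] Render(SinePartial[] partials, int sampleRate, int sampleCount)
    {
        var buffer = new float[sampleCount];
        for (int n = 0; n < sampleCount; n++)
        {
            double t = (double)n / sampleRate;   // time of this sample in seconds
            double sum = 0.0;
            foreach (SinePartial p in partials)
                sum += p.Amplitude * Math.Sin(2.0 * Math.PI * p.Frequency * t);
            buffer[n] = (float)sum;
        }
        return buffer;
    }
}
```

A single partial gives a plain tone. Adding quieter partials at multiples of the base frequency is one simple way to get a richer timbre, as long as the amplitudes are kept low enough that the sum stays within -1 to 1.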

The processing power required to create interesting and realistic sounds using synths isn't very large; however, it's much more than what it takes to play an audio file from storage or RAM.

This is the main reason why you don't see too many games with fully procedural audio systems; when it comes to games, it's generally more important to save processing power than to reduce file size.

At first, I wanted to accomplish this implementation through native Unity (i.e. without any middleware), and to do so I dove deep into research on Unity's built-in audio engine as well as some third-party tools.

I first attempted signal generation in Unity using this tutorial. While successful, it was a very limited implementation, and making any interesting or convincing sounds from it would have been difficult. The Unity audio engine and built-in effects library are somewhat limited, and the way I was able to implement the signal generators only allowed for a small number of adjustable parameters.
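For a sense of what such a generator involves, here is a minimal sketch in plain C#. It is not the tutorial's code: the `SineToneGenerator` class and its member names are hypothetical, and it assumes the three controls were frequency, volume, and noise level. In Unity, a script's `OnAudioFilterRead(float[] data, int channels)` callback could forward its arguments to a method like `Fill`.

```csharp
using System;

// Hypothetical stand-in for a simple Unity-style signal generator.
public class SineToneGenerator
{
    public double Frequency = 440.0;  // Hz
    public float Volume = 0.25f;      // linear gain, 0..1
    public float NoiseLevel = 0.0f;   // 0 = pure tone, 1 = pure white noise

    private readonly int sampleRate;
    private readonly Random random = new Random();
    private double phase;             // position within the current cycle, 0..1

    public SineToneGenerator(int sampleRate)
    {
        this.sampleRate = sampleRate;
    }

    // Fills an interleaved buffer: each frame holds `channels` samples.
    public void Fill(float[] data, int channels)
    {
        for (int i = 0; i + channels <= data.Length; i += channels)
        {
            double tone = Math.Sin(2.0 * Math.PI * phase);
            double noise = random.NextDouble() * 2.0 - 1.0;   // white noise in [-1, 1)
            float sample = (float)(Volume * ((1.0 - NoiseLevel) * tone + NoiseLevel * noise));

            for (int c = 0; c < channels; c++)
                data[i + c] = sample;          // same signal on every channel

            phase += Frequency / sampleRate;   // advance by one sample
            if (phase >= 1.0) phase -= 1.0;    // wrap so the phase stays small
        }
    }
}
```

Tracking the phase across calls, rather than recomputing it from a sample counter, keeps the tone continuous when `Frequency` changes between buffers.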

I then attempted to integrate a more robust synthesis environment, Pure Data, into Unity as a script and component.

I ran into another dead end here, as the only implementation references I could find did not work on Windows.

With deadlines approaching, I decided to pivot to something more familiar, the game audio middleware Wwise.

I had previous experience with it and enjoyed the program's workflow, and there happened to be a few built-in synthesizers that would make my quest a bit more achievable.

The Wisest Choice: The Wwise Synth One

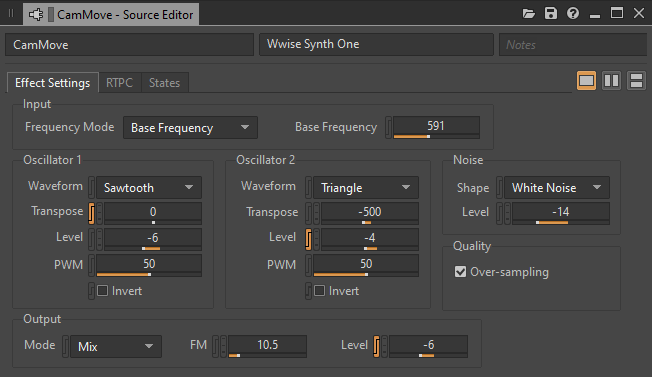

The main synthesizer I ended up using in Wwise was the Wwise Synth One.

It's a simple yet effective synth with enough parameters to create some great sounds. For context, the Unity signal generator could only produce one sine tone at a time and only had three parameters: frequency, volume, and noise level.

As you can see, each Synth One has many, many parameters, as well as two signal generators that can be played simultaneously.

Each signal generator also has a variety of wave shape options (Sine, Triangle, Square, and Sawtooth).
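The four shapes are easy to compare in code. The sketch below uses the naive textbook formulas in plain C#; it says nothing about how Synth One generates its waveforms internally, and the `WaveShape` and `Waveforms` names are hypothetical.

```csharp
using System;

public enum WaveShape { Sine, Triangle, Square, Sawtooth }

public static class Waveforms
{
    // Returns one sample in [-1, 1] for a phase in [0, 1) (fraction of one cycle).
    public static double Sample(WaveShape shape, double phase)
    {
        switch (shape)
        {
            case WaveShape.Sine:
                return Math.Sin(2.0 * Math.PI * phase);
            case WaveShape.Triangle:
                // Rises from -1 to 1 over the first half-cycle, then falls back.
                return 1.0 - 4.0 * Math.Abs(phase - 0.5);
            case WaveShape.Square:
                // High for the first half-cycle, low for the second.
                return phase < 0.5 ? 1.0 : -1.0;
            case WaveShape.Sawtooth:
                // Ramps linearly from -1 up to 1, then jumps back.
                return 2.0 * phase - 1.0;
            default:
                throw new ArgumentOutOfRangeException(nameof(shape));
        }
    }
}
```

The sharp corners in the square and sawtooth shapes are what give them their brighter, buzzier character compared to a sine. These naive versions can alias at high frequencies, which production synths usually take extra steps to avoid.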

Putting my audio engineer chops to use, I dialed in the synths to craft sounds for each source I wanted to test.

Our team's vision for the game was to have the player control a small drone, so I made a "Drone Motor" sound that would rev up when the player moved and down when they were stationary.

What ended up coming to fruition first was a simple demo, where players can move with WASD, and the "motor" sound reacts to player input.

The real challenge here was getting used to the Wwise/Unity integration. The initial process was simple enough - just open the Wwise launcher and hit the "Integrate into Unity Project" button - but the thing that really halted my progress was using code for RTPC control.

RTPC Rundown

RTPC stands for "Real-Time Parameter Control". It's a term used in Wwise to describe a parameter (represented by a floating-point number) that can be changed during runtime to modify aspects of the audio. These aspects could be anything, from as simple as volume to as complex as reverb Early Reflection amount. If there's an adjustable parameter in Wwise, it can almost always be driven by an RTPC.
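In the Wwise authoring tool, an RTPC is set up as a curve that maps the incoming parameter value to the property being driven. The sketch below illustrates that idea with a piecewise-linear curve in plain C#. It is not Wwise's API: the `ParameterCurve` class is hypothetical, and the example points (a 0 to 100 speed value mapped to a pitch offset in cents) are made up for illustration.

```csharp
using System;

// Hypothetical illustration of a parameter-to-property curve.
public class ParameterCurve
{
    private readonly double[] xs;   // parameter values, strictly increasing
    private readonly double[] ys;   // resulting property values

    public ParameterCurve(double[] xs, double[] ys)
    {
        if (xs.Length != ys.Length || xs.Length < 2)
            throw new ArgumentException("Need at least two matching points.");
        this.xs = xs;
        this.ys = ys;
    }

    // Piecewise-linear lookup, clamped to the first and last points.
    public double Evaluate(double x)
    {
        if (x <= xs[0]) return ys[0];
        int last = xs.Length - 1;
        if (x >= xs[last]) return ys[last];

        int i = 1;
        while (x > xs[i]) i++;      // find the segment [xs[i-1], xs[i]] containing x

        double t = (x - xs[i - 1]) / (xs[i] - xs[i - 1]);
        return ys[i - 1] + t * (ys[i] - ys[i - 1]);
    }
}

public static class ParameterCurveExample
{
    // Made-up curve: speed 0..100 mapped to a pitch offset of 0..1200 cents.
    public static ParameterCurve SpeedToPitch()
    {
        return new ParameterCurve(
            new[] { 0.0, 50.0, 100.0 },
            new[] { 0.0, 300.0, 1200.0 });
    }
}
```

With a curve like this, the game only ever reports one number (how fast the drone is going), and the sound designer decides in the authoring tool how that number shapes the sound.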

Even with the Wwise documentation's guidance, I had a difficult time manipulating RTPCs from scripts in Unity.

At first, I tried serializing an RTPC field like I did with the Wwise Events so that I could relate the RTPC to a game object in the editor.

It turns out that RTPC controls (a type of "Game Sync" in Wwise terms) do not show up in the Wwise Picker in Unity.

After much experimentation, I figured out that the correct method is to hard-code the RTPC names into a script.

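    // Ramp the drone speed up by one unit per call until it reaches 100.
    if (pitchParameterValue < 100f) {
        pitchParameterValue += 1f;
        // Arguments: RTPC name, new value, target game object,
        // change duration in milliseconds, interpolation curve.
        AkSoundEngine.SetRTPCValue("Drone_Speed", pitchParameterValue, gameObject,
            50, AkCurveInterpolation.AkCurveInterpolation_Linear);
    }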

"Drone_Speed" is the name of the Game Sync in Wwise that I am manipulating, and "pitchParameterValue" is the value I want to set it to.

The 50 is the time, in milliseconds, over which the change is applied, and "AkCurveInterpolation.AkCurveInterpolation_Linear" is the curve Wwise uses to interpolate between the old and new values over that time, so that the RTPC changes gradually rather than abruptly.
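The snippet above only covers revving up. A rough sketch of the full rev-up/rev-down behavior, kept in plain C# so it does not depend on Unity or Wwise, might look like this. The `DroneSpeedRamp` class and the one-unit step are assumptions for illustration, not the project's actual script.

```csharp
using System;

// Hypothetical helper that tracks the value sent to the "Drone_Speed" RTPC.
public class DroneSpeedRamp
{
    public const float Min = 0f;
    public const float Max = 100f;

    private readonly float stepPerUpdate;

    public float Value { get; private set; }

    public DroneSpeedRamp(float stepPerUpdate = 1f)
    {
        this.stepPerUpdate = stepPerUpdate;
        Value = Min;
    }

    // Call once per frame: ramps up while the player is moving, down otherwise,
    // and keeps the result inside the Min..Max range.
    public float Step(bool isMoving)
    {
        float delta = isMoving ? stepPerUpdate : -stepPerUpdate;
        Value = Math.Max(Min, Math.Min(Max, Value + delta));
        return Value;
    }
}
```

In a Unity `Update()` method, the value returned by `Step` could be passed as the second argument of the `SetRTPCValue` call. A fixed step per frame ramps faster at higher frame rates, so scaling the step by `Time.deltaTime` would be a reasonable refinement.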

After finally getting control of RTPCs, I had all the pieces I needed to create an incredible procedural soundscape.

Wrapping It Up

Working with my teammates, I integrated my procedural audio with Mark's player movement script and Arthur's procedural cave system.

We also worked together to develop a way to determine the size of the space the player is in using raycasts.

This was incredibly useful for my audio, as it allowed me to change the size of the reverb and other effect parameters to make the space sound bigger or smaller as the player moved through the tunnels.
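One way to turn raycast results into a single "room size" number is to average the hit distances and scale them to a fixed range. The sketch below shows that idea in plain C#; it is an assumption about how such a measurement could work, not our actual implementation, and the `RoomSizeEstimator` name and 0 to 100 range are hypothetical. In Unity, the distances would typically come from `Physics.Raycast` hits cast outward from the player in several directions.

```csharp
using System;

public static class RoomSizeEstimator
{
    // Converts ray hit distances (in world units) to a 0..100 "room size" value.
    // A ray that hits nothing can be reported as float.PositiveInfinity;
    // every distance is clamped to the range 0..maxDistance before averaging.
    public static float Estimate(float[] hitDistances, float maxDistance)
    {
        if (hitDistances.Length == 0 || maxDistance <= 0f)
            throw new ArgumentException("Need at least one ray and a positive range.");

        float sum = 0f;
        foreach (float d in hitDistances)
            sum += Math.Min(Math.Max(d, 0f), maxDistance);

        float average = sum / hitDistances.Length;
        return 100f * average / maxDistance;
    }
}
```

The result could then be sent to Wwise with `AkSoundEngine.SetRTPCValue`, using a non-zero change duration so the reverb does not jump when the player crosses from a tight tunnel into a large chamber.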

With just the reverb, the effect was not very noticeable, so I added a toggleable test tone that revs up or down depending on how large the space is.

This can be found in the Unity project as a component of the Player object.

After tweaking some effects and parameters in Wwise, this was the end product.

In addition to the various tweaks to the signal generator sources, I used a flanger and pitch shifter on the main reverb to make it sound more underwater-like.

In the future, I think the "Room Size" parameter could be very useful for adaptive music.

Having the tone of an ambient soundtrack change depending on how big of a space the player is in would make for an incredibly interesting sonic experience, especially because the sound system must adapt to a procedurally-generated cave system.

I was very happy with what our team accomplished here, and am excited to dive deeper into experimental game audio programming in the future.